Nowadays I see people going crazy about automation. Everything must be in Docker containers: if it’s not in Docker, I ain’t touching it.

Every task must be performed on the CI server, every repetitive task must be converted to a script, and nothing in the MVP may be done manually by the back office. People are praised when they proclaim: “automate everything”!

I am on the very opposite side. I think automation generally isn’t worth it. It can be harmful to your project, and I find the “automate everything” trend kinda crazy. Let me explain why.

It ain’t gonna automate itself

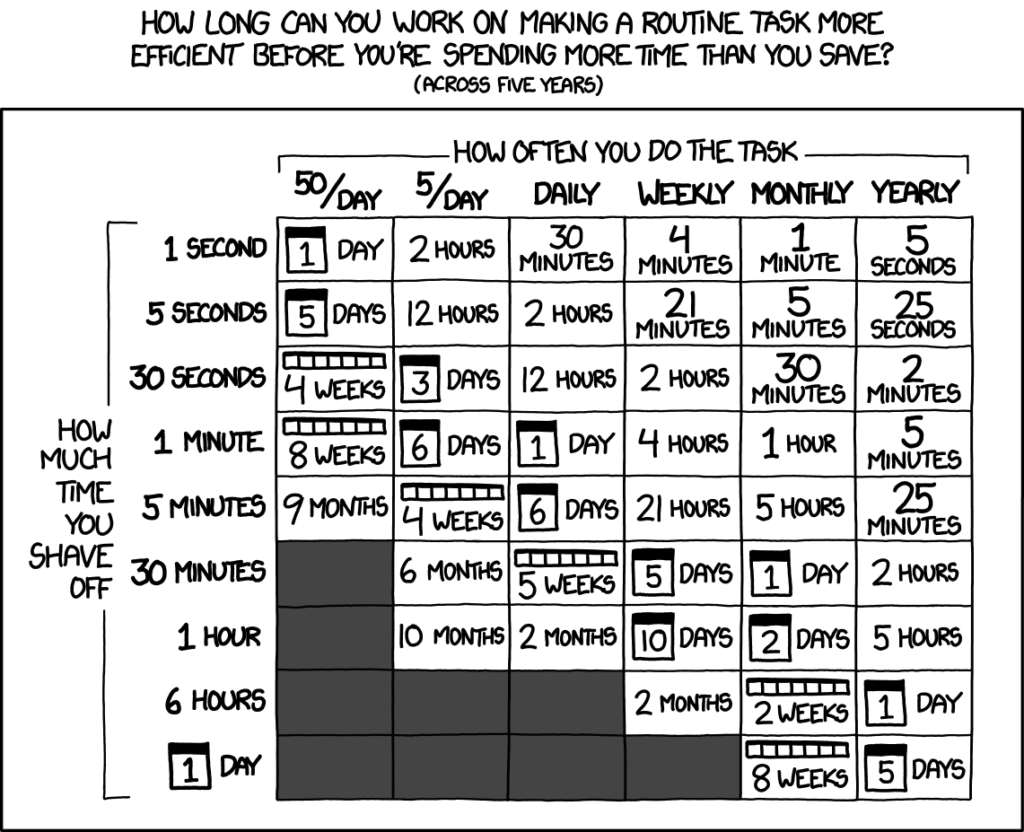

When it comes to automation, the rule of thumb goes: you don’t want to spend more time on automation than it can save you.

Let’s see this in practice. Let’s compare a brand new bare Rails server versus the same Rails server dockerized. How much time does dockerizing save?

- When it comes to starting a project, `rails new` followed by `rails s` works faster than doing the same inside Docker. Docker requires more time to set up config files. Not much more (everybody can look it up), but still. For the beginning of the project, let’s assume a tie.

- Things get more complicated when we add more dependencies (Postgres, Redis, gems, whatnot). Either in Docker or outside, the setup takes time. My rough observation: the “outside Docker” setup takes X hours, and in Docker it takes the same. Docker does not save time when you introduce a dependency, only later. Tie.

- For builds, there is no difference. Assuming you have a CI server, you have to write the build script either in Docker or directly in the CI. Tie.

- For a so-called new dev, Docker could make a difference, though. Instead of piecing together or guessing how to build the project, they should be able to build it with one command, and voilà! How much time is saved? 10 minutes.

- To be fair, we shouldn’t count only new developers. “Old” devs happen to be “new devs” from time to time: when they mess up their project setup, move to a new machine, etc.

Quick math: assuming one “new” dev every month and 10 minutes saved each time, dockerization saves two hours a year. How long will your project last? Two years? Can you dockerize your project & dependencies within 4 hours?
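To make the arithmetic explicit, here is a minimal Python sketch of that break-even estimate. The function names are made up for illustration, and the inputs are the same guessed numbers as in the text (10 minutes saved per setup, one setup a month, a two-year project), not measurements.

```python
def yearly_savings_hours(minutes_saved_per_setup: float, setups_per_year: int) -> float:
    """Hours saved per year if every setup gets faster by the given minutes."""
    return minutes_saved_per_setup * setups_per_year / 60


def break_even_budget_hours(
    minutes_saved_per_setup: float, setups_per_year: int, project_years: float
) -> float:
    """Most hours you can spend on the automation before it costs more than it saves."""
    return yearly_savings_hours(minutes_saved_per_setup, setups_per_year) * project_years


# Guessed inputs from the text: 10 minutes, 12 "new dev" setups a year, 2 years.
saved_per_year = yearly_savings_hours(10, 12)
budget = break_even_budget_hours(10, 12, 2)
print(f"Saved per year: {saved_per_year} h, break-even budget: {budget} h")
```

Plug in your own guesses. If the dockerization (plus fixing it when it breaks) takes longer than the budget, it loses by this rule of thumb.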

I know what you’re thinking. The numbers are made up. That’s true: they’re based on my honest experience, but I’ve never measured them.

For many devs, the numbers feel very pessimistic. My colleagues had a radically different perception: Docker saves hours, if not days. Good for them. From my point of view, it’s wishful thinking. We want things to automate smoothly, but in reality that happens very rarely.

Why do we have different perceptions? Because we’re talking about perceptions, not numbers.

Metrics are lacking. Maybe I’m terribly wrong about how much time Docker containers can save you. Because of that, I might underestimate the power of automation. But I don’t see the other side backing its argument with metrics, either. Honestly, I have never seen numbers in an “is it worth automating or not” discussion. Without numbers, I’m skeptical that it’s worth it.

“I’m skeptical” doesn’t mean I always turn it down. Even though I have never measured it, I can still feel some automation saving time. Namely two kinds:

- Automated tests

- Continuous Integration servers

Most other automation I generally see as a caprice.
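If you wanted to replace gut feeling with numbers, collecting them doesn’t have to be fancy. Below is a rough Python sketch under my own assumptions: a hypothetical `timed` helper records how long a manual step takes, so the totals can later be compared with the cost of automating that step. The names `timed`, `durations` and `total_minutes` are invented for this example.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Recorded durations in seconds, grouped by task name (kept in memory only).
durations = defaultdict(list)


@contextmanager
def timed(task_name, clock=time.perf_counter):
    """Record how long the wrapped block took under the given task name."""
    start = clock()
    try:
        yield
    finally:
        durations[task_name].append(clock() - start)


def total_minutes(task_name):
    """Total recorded time for a task, in minutes."""
    return sum(durations[task_name]) / 60


# Usage: wrap the manual step you are tempted to automate.
with timed("manual project setup"):
    pass  # placeholder for the actual manual work

print(f"manual project setup: {total_minutes('manual project setup'):.4f} min so far")
```

A few weeks of such records would at least turn “it saves hours” versus “it saves minutes” into a question with an answer.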

The lack of metrics and my bad experience with automation make me very skeptical about automation. However, there’s more.

The cost of maintenance

The upfront cost of the automation (it ain’t gonna automate itself) is one thing. Another thing is that automation needs to be maintained.

I strongly recommend reading The Law of Leaky Abstractions. I like the flow of the post, examples, and conclusions. That post splendidly explains my concerns about the cost of maintenance.

Let’s discuss three ideas from that post, in the context of automation:

1. All non-trivial abstractions, to some degree, are leaky.

That’s the titular law of leaky abstractions.

Speaking of automation: it’s impossible to write automation that is 100% bulletproof. The wet dream of all the “automators” is to write a script once and have it work every single time for everybody. This isn’t reality.

In reality, every automation breaks sooner or later: it doesn’t work on somebody’s computer or on some OS, some strange network issue occurs, etc.

My favorite case is automation that considers only the happy path. How many times did you wonder why the CI failed?

What happens when the automation leaks? We can either let it leak or fix it.

When we let it be, the automation becomes worthless. It is still there, taking up your attention and adding cognitive load, but it doesn’t serve its purpose. From my experience, leaky automation is close to no automation at all.

On the other hand, when we make developers fix it, it adds more burden on developers to maintain the automation. Yikes.

I recommend radically accepting the law of leaky abstractions as if it were a law of physics, and taking it into account when you consider your next automation.

2. Even as we have higher and higher level programming tools with better and better abstractions, becoming a proficient programmer is getting harder and harder.

Because all automation is leaky, it adds to the tech stack. Automation that was supposed to abstract a process becomes yet another step in it.

A metaphor that spoke to my colleague’s heart: when you automate pushing 5 buttons with another button, you wish to only work on that one button. Instead, as all automations are leaky, you now have 6 buttons to take care of.

In my experience, projects consist of multiple layers of automation that we hope to never need to learn. Among them, there’s a very thin layer of what the project is about. Eventually, it may become unclear what the project is actually about: do we develop features or handle automation?

I sincerely think this has an impact on our velocity. It can take a really long time to make a very small change, and the reason is not the scope of the change but all the linked automation.

What does that say about us in the eyes of the business? As a client, I would get upset if my IT department needed days to make any change because they have to maintain a huge stack of technologies of their own choosing.

The fewer layers you operate on, the better you can learn them. Devs not knowing what’s under all the automation can harm your business.

My experience is that the vast majority of production issues aren’t caused by “my” code, but by “their” code that I didn’t understand or take the time to study. Which leads us to another point.

3. The abstractions save us time working, but they don’t save us time learning

We shouldn’t think of automation as a way to avoid learning. Instead, think of automation as another book you need to read. With that in mind, you should be very picky about what layer you really need.

If you’re not careful about it, you easily end up with 20 layers of automation: servers, tools, languages, libraries and all that kind of “magic”. Learning the whole stack becomes impossible.

What to do instead?

You have to automate without releasing your people from the obligation to learn. Here is my proposition.

I’m a huge fan of visibility in IT and consider it the most efficient way of communicating. Visibility can be useful here as well. Leverage it and do what real businesses do with their processes:

- Draw a flow chart of the whole process.

- Make it clear to everybody what each step means. Brainstorm what could be improved in the flow. Identify your pains.

- Think about whether the process is worth automating. Maybe to get rid of your pains, you can automate only a part of it?

- Also consider eliminating the process altogether (or parts of it).

See how automation is only one of the possible solutions?
