This is the most important, yet least discussed, trait of good tests. Tests should be the oracle.

If all tests are green – the app will work on prod. If at least one test is red – the app won’t work.

Think Continuous Delivery. Every step in the process has to be automated. When tests are green, there is no room for extra human checks before going to prod. The decision (deploy or don’t deploy) is handed over to your tests.

Do you trust your tests this much? Is it even possible to be this confident in your tests?

What tests the tests?

We are used to thinking that tests prove the correctness of the code or, more precisely, disprove its incorrectness.

A natural question that springs to mind is: what proves the correctness of the tests themselves?

“You should write tests for your tests, he he xD” is a common reaction. It fails to amuse me. First of all, tests for your tests do exist (see mutation testing), but I’m not a fan.
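For readers who haven’t met mutation testing: the idea is to plant small bugs (mutants) in the code and check whether the suite notices. Tools such as PIT (Java) or mutmut (Python) generate mutants automatically; the sketch below hand-writes two instead, and `is_adult`, the two suites, and `surviving_mutants` are illustrative names only. A mutant that survives points at a hole in the tests.

```python
from typing import Callable

Impl = Callable[[int], bool]


def is_adult(age: int) -> bool:
    """Code under test."""
    return age >= 18


# Hand-written mutants: each flips one operator, the way a mutation tool would.
MUTANTS: dict[str, Impl] = {
    ">= became >": lambda age: age > 18,
    ">= became <=": lambda age: age <= 18,
}


def weak_suite(impl: Impl) -> bool:
    """Passes if impl handles an obvious adult and an obvious child."""
    return impl(30) is True and impl(5) is False


def strong_suite(impl: Impl) -> bool:
    """Adds the boundary case the weak suite forgot."""
    return weak_suite(impl) and impl(18) is True


def surviving_mutants(suite: Callable[[Impl], bool]) -> list[str]:
    """A mutant 'survives' when the suite still passes against it."""
    if not suite(is_adult):
        raise ValueError("the suite must pass on the original code first")
    return [name for name, mutant in MUTANTS.items() if suite(mutant)]


print(surviving_mutants(weak_suite))    # ['>= became >']
print(surviving_mutants(strong_suite))  # []
```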

There is a better way to verify your tests. It’s infallible and very personal to each project.

This way is the reality check.

Reality check

Deploy your app to prod and see if it does the job.

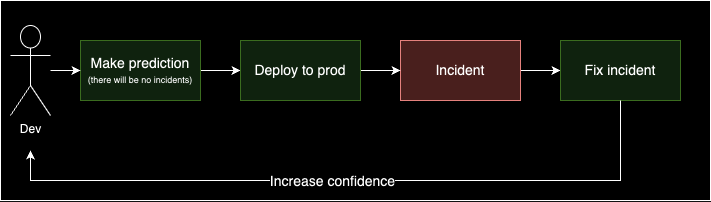

Because programming is hard and because you have to keep delivering, an incident will inevitably happen (think a bug or a crash; the broader your definition, the better).

An incident means your tests gave a false positive: they were green, yet the app failed in prod. Tests are supposed to catch every incident before it reaches prod. The oracle has failed.

In other words, false positives disprove the correctness of the tests.

Your app has many incidents? Your tests are bad.

Your app has no incidents? Your tests are good enough. For now (until the next incident).

That’s what I envision when I think of “tests test your code and code tests your tests”.

No-false-positiveness is king

No-false-positiveness (for lack of a better word) is the most important trait of a test suite.

Yes, tests should be as fast as possible. But if your fast tests lead to false positives – are they useful? Class tests (often wrongly called unit tests) tend to be extremely fast but do not catch integration errors. Not a fan.
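A contrived sketch of how fast class tests can stay green while the integrated app is broken. The classes and the cents-vs-dollars mismatch are hypothetical: each class test passes against a stub that encodes that class’s own assumptions, and only the test that wires the real objects together catches the bug.

```python
class PriceService:
    """Real collaborator: returns prices in cents."""

    def price_of(self, sku: str) -> int:
        return {"apple": 150}[sku]


class Checkout:
    """Assumes its collaborator returns prices in dollars: the hidden mismatch."""

    def __init__(self, prices) -> None:
        self.prices = prices

    def total_in_dollars(self, skus: list[str]) -> float:
        return sum(self.prices.price_of(sku) for sku in skus)


class StubPrices:
    """Test double written from Checkout's point of view (dollars)."""

    def price_of(self, sku: str) -> float:
        return 1.50


def checkout_class_test() -> bool:
    return Checkout(StubPrices()).total_in_dollars(["apple", "apple"]) == 3.0


def price_service_class_test() -> bool:
    return PriceService().price_of("apple") == 150


def integration_test() -> bool:
    # Real objects wired together, as in prod.
    return Checkout(PriceService()).total_in_dollars(["apple", "apple"]) == 3.0


print(checkout_class_test(), price_service_class_test())  # True True
print(integration_test())  # False: the real total is 300, not 3.0
```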

Yes, code coverage is nice. But 100% coverage doesn’t guarantee no-false-positiveness. In contrast, code with 20% coverage can give perfect confidence (e.g., when a critical path is tested inside and out, and the rest of the app never causes a problem).
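A small, hypothetical illustration: the test below executes every line of `average`, so a line-coverage tool would report 100%, yet an input nobody tested (an empty list) crashes it. `survives_prod_input` just stands in for a production call.

```python
def average(values: list[float]) -> float:
    return sum(values) / len(values)


def test_average() -> None:
    # Executes every line of average(): 100% line coverage.
    assert average([2.0, 4.0]) == 3.0


def survives_prod_input(values: list[float]) -> bool:
    """Hypothetical prod call; returns False if it would crash."""
    try:
        average(values)
        return True
    except ZeroDivisionError:
        return False


test_average()                   # green
print(survives_prod_input([]))   # False: an empty list was never tested
```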

Yes, tests should precisely tell you where the problem is. Class tests can do that, but often you sacrifice no-false-positiveness to get there, which isn’t the best of deals.

You can see a pattern here. I’m not a fan of class tests. A test suite that consists only of class tests has many traits, but no-false-positiveness isn’t one of them.

No-false-positiveness builds confidence

Hopefully, nobody deploys the app knowing it will cause incidents. Rather, incidents come as a surprise. At least, I want to believe so.

If you have a sane sense of confidence, it is inversely proportional to the number of incidents that have happened recently.

You shouldn’t feel confident if every deploy leads to many incidents.
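If you want to keep that sense of confidence honest, you could track it. A minimal sketch, assuming Continuous Delivery (so every shipped deploy had green tests) and that each incident can be traced back to a deploy; the `Deploy` record and both functions are hypothetical. Every deploy that still caused an incident counts as a false positive.

```python
from dataclasses import dataclass


@dataclass
class Deploy:
    version: str
    incidents: int  # prod incidents traced back to this (green) deploy


def false_positives(history: list[Deploy]) -> int:
    """Green deploys that still caused at least one incident."""
    return sum(1 for d in history if d.incidents > 0)


def false_positive_rate(history: list[Deploy], window: int = 10) -> float:
    """Share of the last `window` deploys that were false positives."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent = history[-window:]
    if not recent:
        return 0.0
    return false_positives(recent) / len(recent)


history = [Deploy("1.0", 2), Deploy("1.1", 0), Deploy("1.2", 1), Deploy("1.3", 0)]
print(false_positives(history))                # 2
print(false_positive_rate(history))            # 0.5
print(false_positive_rate(history, window=1))  # 0.0
```

A falling rate over recent deploys is the kind of signal that should raise your confidence; a rising one should lower it.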

On the other hand, if your app has few incidents, your tests are good, even if, for example, they are written in an unorthodox manner.

If there are incidents, fear not. Fix them and adapt the tests. Mend the oracle. Every fixed incident should increase your confidence.
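Mending the oracle usually means pinning the incident with a regression test that replays the exact prod input. A sketch with a made-up incident: `normalize_email` and the trailing-space bug are hypothetical, and the tests are pytest-style functions called directly at the bottom so the block also runs without pytest.

```python
def normalize_email(raw: str) -> str:
    # The fix. Hypothetical incident: users who pasted their email with a
    # trailing space could not log in, because the old version only lowercased.
    return raw.strip().lower()


def test_lowercases() -> None:
    assert normalize_email("Bob@Example.com") == "bob@example.com"


def test_regression_trailing_space() -> None:
    # The exact input from the incident report: if this breaks again,
    # the suite goes red before prod does.
    assert normalize_email("bob@example.com ") == "bob@example.com"


test_lowercases()
test_regression_trailing_space()
```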

Funny thing: if you have a strong feedback loop, you can start with any code and tests and you’ll end up with perfect code and tests.

The opposite is also true. No matter how perfect your product is, a poor feedback loop will ruin it.

Sounds trivial?

This may sound trivial. Inspect and adapt, right?

But there is a reason I’m writing this article: even this is too difficult for many devs.

I’ve seen many examples of people trying to negotiate with reality. They write a “perfect test suite”, get more and more incidents, yet refuse to abandon their beliefs.

Picture this: the test suite has 100% code coverage. Plenty of class tests. 100% pass. The app doesn’t work.

Are these good tests?

I’ve met many devs who say yes. How would you explain the goal of your test suite if you answered “yes”?

Don’t try to be right. Let prod tell you the truth; that’s all you need.

100% confidence?

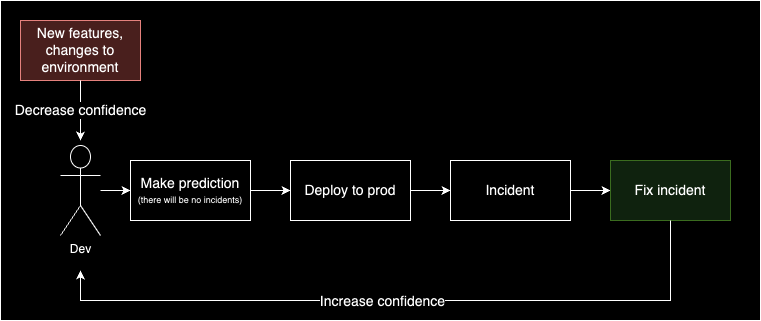

I don’t think 100% confidence is achievable. The reason: you can’t just freeze your app, find all the incidents, and fix them.

You have to keep delivering new features, and the environment (libraries, cloud) keeps evolving. Both obscure the whole learning process.

You’ll never reach 100%, even if there hasn’t been an incident for a long time. There are too many cases to cover, and the product keeps changing.

It’s a process, and you should accept it. Work iteratively. You won’t be 100% confident, but keep striving for it.

It’s no-false-positiveness first, not tests

I would not care about tests if I ever saw a better way to ensure no-false-positiveness.

Tests are an automatic and deterministic way to:

- prove the correctness of the new code

- prove there is no regression

That is a huge confidence boost when it comes to predicting that there will be no incidents. The day I find another tool that gives me all that confidence is the day I quit bitching about writing good tests.

Summary

- I want to write tests so that they produce no false positives. I aim for the infallible oracle.

- This is the only measure of good tests.

- You should build your confidence on the reality check of the app. Otherwise, you’re being unrealistic.

- Programming is hard, you won’t get 100% confidence. Reality will try to ruin it for you. But keep trying.

- Count false positives and reduce them to zero. That’s all it takes.

In the next article, I share concrete ideas on how to write tests that give more confidence.
